Algorithms now shape much of modern life, from social media feeds to credit scoring systems. These powerful mathematical tools are used to make decisions and automate processes in fields ranging from finance and healthcare to education and law enforcement. As their use has spread, however, so have concerns that they can perpetuate bias and discrimination.

As a sociologist who has presented on technology at international conferences, I have seen how algorithms can reproduce and amplify social inequalities. During my PhD in sociology, I presented research on differences in internet use across racial groups, highlighting how access to technology is shaped by social and economic factors. I also hold three certificates in artificial intelligence and have worked in the startup field, where I observed directly how algorithms can perpetuate bias and discrimination.

Algorithmic bias is a complex and multifaceted phenomenon, but at its core, it refers to the ways in which algorithms can reflect and amplify the biases and inequalities that exist in society. This can take many forms, from the underrepresentation of certain groups in training data to the use of biased decision rules and criteria. In some cases, algorithmic bias may be intentional, such as when algorithms are designed to discriminate against certain groups. However, in many cases, bias is unintentional and results from the inherent limitations of algorithms and the data they are based on.

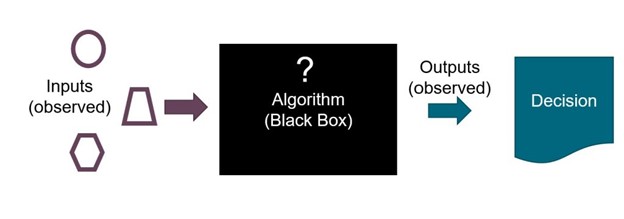

Opacity Challenge

One of the key challenges in addressing algorithmic bias is the opacity of algorithms themselves. Unlike human decision-makers, algorithms are often perceived as objective and impartial, so their biases are less likely to be questioned, identified, or corrected. This is particularly true of “black box” algorithms, complex systems whose internal logic is hard to interpret or understand. As a result, it can be difficult to determine when an algorithm is biased or to hold those who use it accountable.

Addressing Algorithmic Bias

Despite these challenges, recent years have seen growing efforts to address algorithmic bias. In the field of artificial intelligence, for example, there has been an increasing focus on fairness, accountability, and transparency. This has led to new tools and techniques for identifying and mitigating bias in algorithms, such as “explainable AI” and “fairness metrics.”
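To make “fairness metrics” less abstract, here is a minimal sketch of one common metric, demographic parity, which compares how often each group receives a favorable decision. It assumes binary decisions (1 = favorable) and a single group attribute; the function names and toy data are hypothetical, chosen only for illustration, and dedicated fairness toolkits provide fuller implementations.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of favorable decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(decisions, groups):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: 1 = approved, 0 = rejected
decisions = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(selection_rates(decisions, groups))                # {'A': 0.75, 'B': 0.25}
print(demographic_parity_difference(decisions, groups))  # 0.5
```

A gap of 0.5 here means group A is approved three times as often as group B. Demographic parity is only one definition of fairness; it ignores whether the underlying outcomes differ, which is why it is usually read alongside error-rate metrics.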

However, addressing algorithmic bias requires more than just technical solutions. It requires a deeper understanding of the social and cultural contexts in which algorithms are developed and deployed. It requires an awareness of the ways in which biases and inequalities are produced and reproduced in our society, and a commitment to challenging and disrupting those patterns.

Areas of Concern

- One area where algorithmic bias has been particularly problematic is the criminal justice system. Algorithms used to predict recidivism, that is, the risk that a person will reoffend, have been widely criticized for perpetuating racial biases and disparities. Studies have found that these tools are more likely to falsely flag Black defendants as high risk than white defendants, even when they have comparable criminal histories. This can have serious consequences: because risk scores can inform decisions such as sentencing, biased scores can lead to harsher sentences and further entrench racial disparities in the criminal justice system.

- Another area where algorithmic bias has been a concern is employment. Many companies now use algorithms to sift through resumes and identify candidates for job interviews. However, these algorithms may be biased against certain groups, such as women and people of color. Studies have shown that some of these systems are more likely to overlook qualified candidates from these groups, perpetuating inequalities in the job market.
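The recidivism concern described above is a claim about error rates: among people who did not go on to reoffend, what share were nonetheless labeled high risk? This is the false positive rate, and an audit would compute it separately for each group. The sketch below assumes binary “flagged high risk” and “reoffended” labels; the function name, group labels, and data are hypothetical.

```python
def false_positive_rate_by_group(flagged, reoffended, groups):
    """For each group, the share of people who did NOT reoffend but were flagged high risk.

    Groups with no non-reoffenders are omitted, since their rate is undefined.
    """
    stats = {}  # group -> (false positives, people who did not reoffend)
    for f, r, g in zip(flagged, reoffended, groups):
        if r:  # people who reoffended cannot be false positives
            continue
        fp, negatives = stats.get(g, (0, 0))
        stats[g] = (fp + f, negatives + 1)
    return {g: fp / n for g, (fp, n) in stats.items()}


# Hypothetical toy data (1 = yes, 0 = no)
flagged    = [1, 1, 0, 1, 0, 1, 0, 0, 0, 0]
reoffended = [0, 1, 0, 0, 0, 0, 1, 0, 0, 0]
groups     = ["X", "X", "X", "X", "X", "Y", "Y", "Y", "Y", "Y"]

print(false_positive_rate_by_group(flagged, reoffended, groups))  # {'X': 0.5, 'Y': 0.25}
```

In this toy example, people in group X who did not reoffend are wrongly flagged twice as often as their counterparts in group Y, the kind of disparity the recidivism studies describe.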

Addressing algorithmic bias is clearly a complex challenge that requires a coordinated effort from many stakeholders: not only technical experts and policymakers, but also civil society organizations, activists, and affected communities. It requires a willingness to challenge established power structures, and to challenge those structures effectively, we must first understand the mechanisms through which they operate. In the case of algorithmic bias, this means examining how algorithms are designed and implemented, and the social contexts in which they are deployed.

Key Contributing Factors

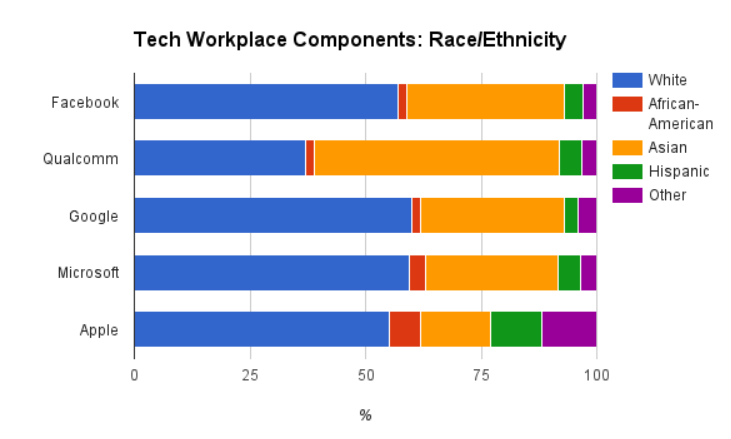

One key factor contributing to algorithmic bias is the lack of diversity among those who create and oversee algorithms. Research has consistently shown that the tech industry is dominated by white men, and this homogeneity can be reflected in the design of algorithms. When algorithms are created by a homogeneous group of individuals with limited perspectives, biases are more likely to be built into their design.

Another important factor is the data used to train algorithms. Algorithms are only as good as the data they are fed, and if that data is biased or incomplete, the resulting algorithm will also be biased. For example, if a facial recognition algorithm is trained on a dataset made up overwhelmingly of white faces, it may not accurately recognize the faces of people with darker skin tones. Similarly, if an algorithm makes lending decisions based on historical data that reflects discriminatory practices, it is likely to perpetuate those same biases.
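A first, simple check for the data problem described above is to compare each group's share of the training set with its share of the population the system will serve. The sketch below assumes every training example carries a group label and that reference population shares are known; the function name, skin-tone labels, and numbers are hypothetical. Matching these shares does not guarantee a fair model, but a large gap is an early warning sign.

```python
from collections import Counter


def representation_gaps(sample_groups, reference_shares):
    """Compare each group's share of a dataset with its share of a reference population.

    Returns dataset share minus reference share for each group in
    reference_shares; negative values mean the group is underrepresented.
    """
    counts = Counter(sample_groups)
    total = len(sample_groups)
    return {
        group: counts[group] / total - share
        for group, share in reference_shares.items()
    }


# Hypothetical training-set labels and reference population shares
training_groups = ["lighter"] * 80 + ["darker"] * 20
reference = {"lighter": 0.6, "darker": 0.4}

gaps = representation_gaps(training_groups, reference)
print({group: round(gap, 2) for group, gap in gaps.items()})  # {'lighter': 0.2, 'darker': -0.2}
```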

The deployment of algorithms in specific social contexts can also contribute to bias. For example, a predictive policing algorithm that is used to identify high-crime areas may end up targeting predominantly Black and Latino communities, perpetuating existing patterns of racial profiling and discrimination. Additionally, the use of proprietary algorithms by private companies can make it difficult for outside researchers and regulators to gain access to the data necessary to assess bias.

Steps to Address Algorithmic Bias

- One important approach is to increase diversity among those who create and oversee algorithms. This can be done by encouraging more women and people of color to pursue careers in tech, and by ensuring that diverse perspectives are represented on algorithmic design teams.

- Another important approach is to ensure that the data used to train algorithms is diverse and representative. This may require more comprehensive data collection efforts, and greater attention to the potential biases inherent in existing datasets.

- Transparency and accountability are also critical. Algorithms should be subject to independent auditing and assessment, and companies that use algorithms should be required to disclose how they use them and what data they collect.

Ultimately, addressing algorithmic bias requires a multifaceted approach involving a wide range of stakeholders, including policymakers, tech companies, researchers, and civil society organizations. By working together, we can create a future in which algorithms promote equity and justice rather than perpetuate existing biases and inequalities.